Abstract

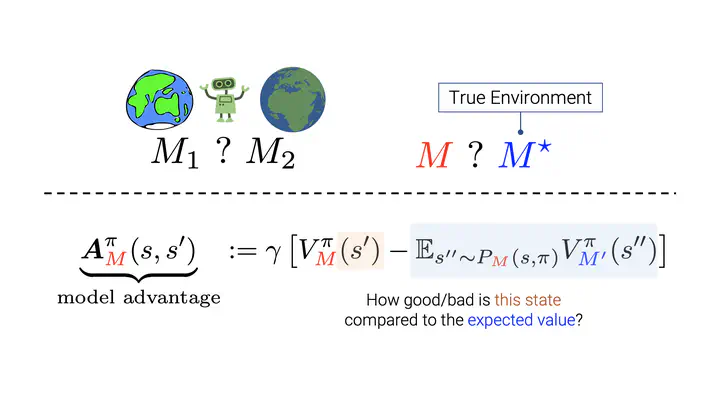

Despite the breakthroughs achieved by Reinforcement Learning (RL) in recent years, RL agents often fail to perform well in unseen environments. This inability to generalize to new environments prevents their deployment in the real world. To help measure this gap in performance, we introduce model-advantage, a quantity similar to the well-known (policy) advantage function. First, we show relationships between the proposed model-advantage and generalization in RL, which we use to provide guarantees on the gap in performance of an agent in new environments. Further, we conduct toy experiments to show that even a sub-optimal policy (learnt with minimal interactions with the target environment) can help predict whether a training environment (say, a simulator) helps learn policies that generalize. Finally, we show connections with model-based RL.